Computer and Information Science

Associates Degree (050.3)

Program assessment results and planned curricular changes

Students in CIT-111 Fall of 2017 completed a two-task instrument assessing the state of their foundational programming skills. This document describes the results of this administration and outlines plans for course improvement based on those results.

Task 1 Results: Implementing flow chart logic in Java

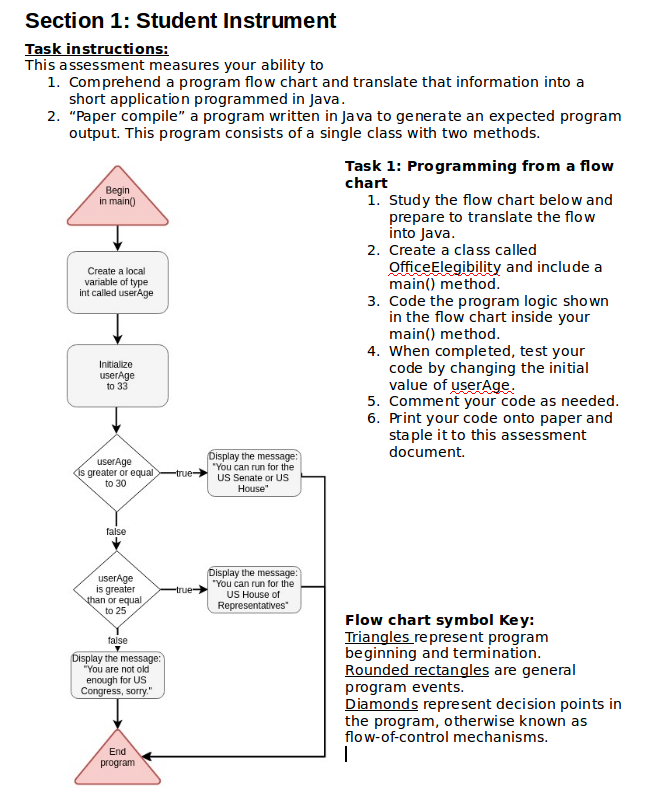

Task instrument

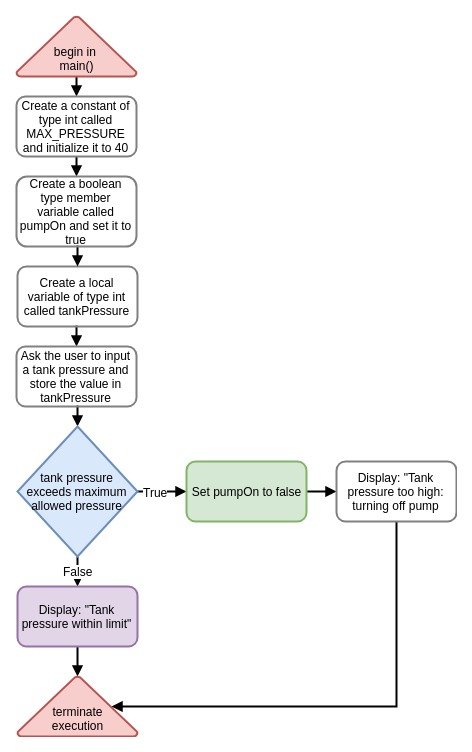

Task 1 asked students to write an application capable of determining the user's eligibility for the US Senate and the US House of Representatives. The decision logic is described in a flow chart composed of commonly used symbols for program initialization, decisions, and other events.

Knowledge and skill requirement breakdown

The following table contains curriculum-level learning objectives which directly map to the skills assessed in task 1.

| Learning Unit | Relevant Objective | Manifestation in task 1 |
|---|---|---|
| Program Design | Students will be able to (SWBAT) digest a program flow chart and determine the appropriate structures in Java code to implement the model | Task 1 provided a flow chart with 9 related components containing two decision structures and the required results based on the decision outcome |
| Program structure | SWBAT create a simple executable program in Java containing an appropriately named class, a main() method, and application code | OfficeEligibility in its most straightforward implementation requires only one Java class and only one method, the main() method |
| Language Essentials | SWBAT create primitive-type variables and manipulate their values with simple binary operators | The OfficeEligibility application required the creation of an int type variable representing the user's age. This value was used in decision structures to control program execution. |
| Control of flow | SWBAT create program execution paths using if/else-controlled blocks of code which implement specified decision logic | The user's age in OfficeEligibility was checked against specified age constants that correspond to constitutional minimum age requirements for holding federal office in the US Congress |
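A minimal sketch of the kind of program these objectives describe follows. The flow chart itself is not reproduced in this text, so the output wording, the constant names, and the helper method `describeEligibility` are assumptions. The age thresholds of 25 (House) and 30 (Senate) are the constitutional minimums. The decision logic sits in a small helper so it can be exercised with several ages; the most straightforward version described in the table would keep everything in main().

```java
class OfficeEligibility {

    // Constitutional minimum ages for the two chambers of Congress.
    static final int MIN_HOUSE_AGE = 25;
    static final int MIN_SENATE_AGE = 30;

    // Hypothetical helper: returns the message for a given age.
    static String describeEligibility(int age) {
        if (age >= MIN_SENATE_AGE) {
            return "Eligible for the US Senate and the US House of Representatives";
        } else if (age >= MIN_HOUSE_AGE) {
            return "Eligible for the US House of Representatives only";
        } else {
            return "Not yet eligible for the US Senate or the US House of Representatives";
        }
    }

    public static void main(String[] args) {
        // Change this value to test each branch of the decision logic.
        int age = 27;
        System.out.println(describeEligibility(age));
    }
}
```

With the assumed starting value of 27, the program takes the middle branch and reports House eligibility only.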

Scoring rubric

The following scoring rubric serves as a guide for determining student scores on completed assessment instruments. Each row specifies the criteria on which to award a single integer score of 0 through 4 on each completed instrument. Scores correspond to the subjective proficiency categories listed in the table below.

Note the progression of the criteria from a score of 1 through a score of 4. The rigor of a score of 3 corresponds to mastery of each of the objectives listed in the previous section. A score of 4 is subjectively labeled "advanced" proficiency: it requires the same level of objective-related proficiency as a score of 3, plus one or more features of the submitted Java code that reveal increased attention to broader design-related considerations.

| Proficiency Level | Scoring criteria |

| 0 – Not attempted | No Java code was attached to the assessment or the code was in no way related to the flow chart |

| 1 – Insufficient skills |

|

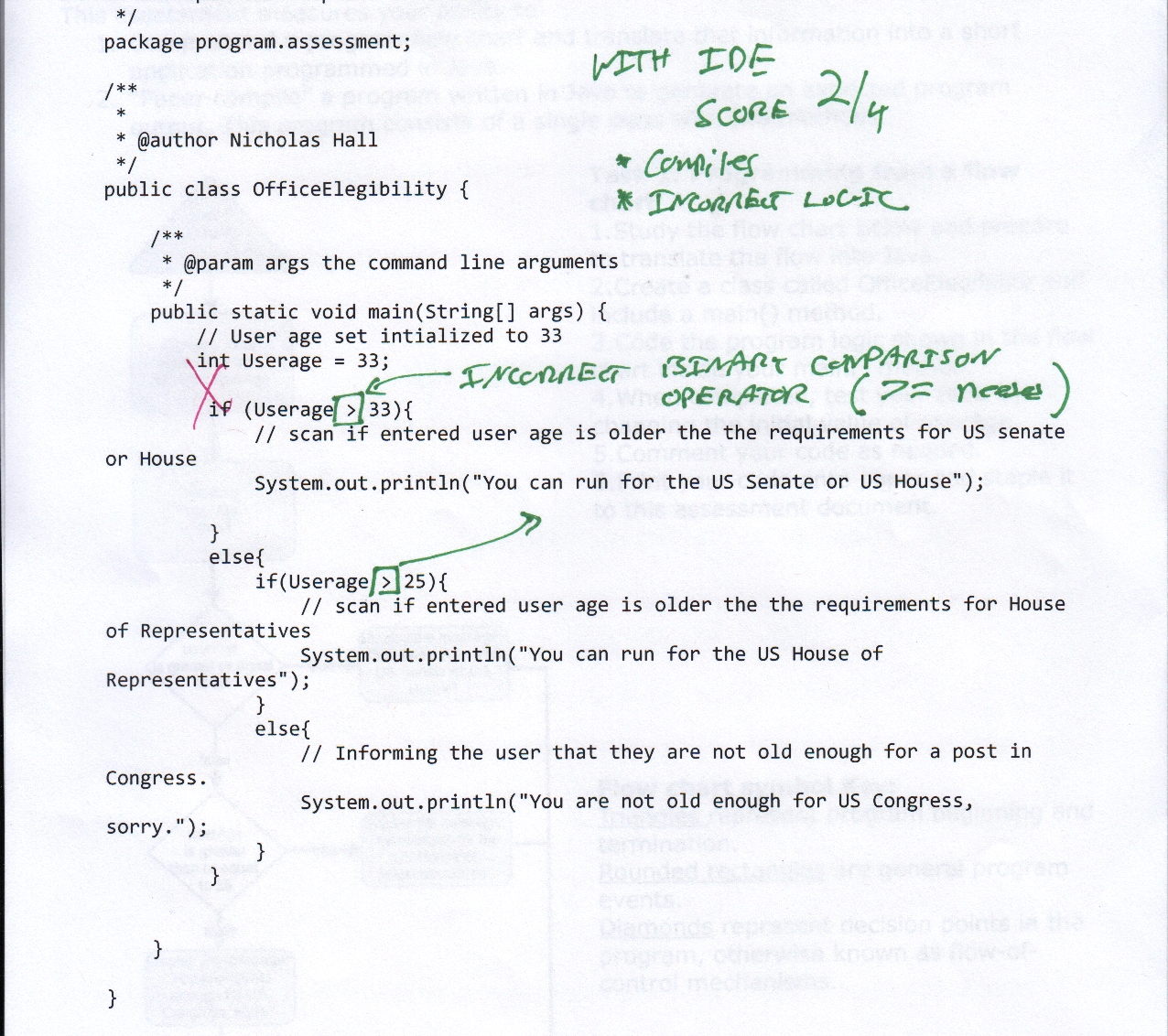

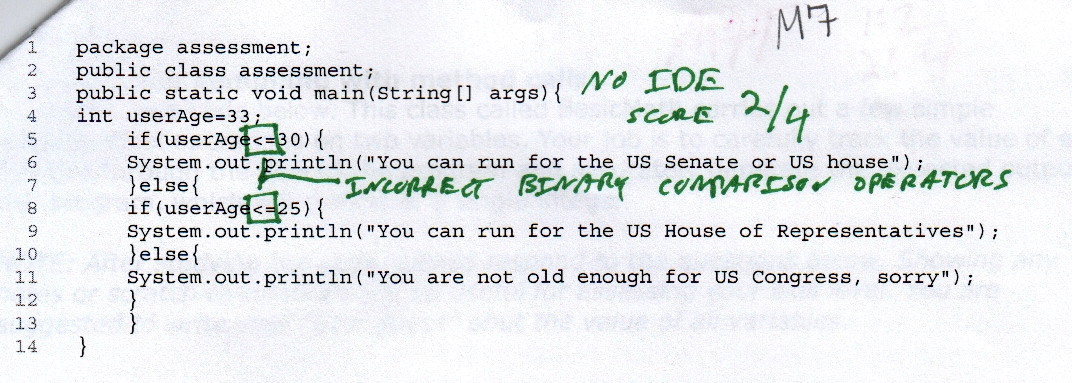

| 2 – Below proficient |

|

| 3 – Proficient |

|

| 4 – Advanced |

|

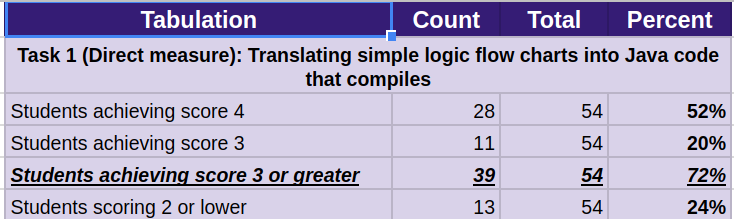

Aggregated assessment instrument results

The instrument was administered to 54 students across 3 sections of CIT-111: Introduction to Programming with Java. 72% of students scored a 3 or a 4 on the scoring rubric presented in the previous section. This suggests that an acceptable majority of students have mastered the objectives the instrument was designed to assess given the established threshold of 70% scoring 3 or above.

In tabular form, the results are as follows:

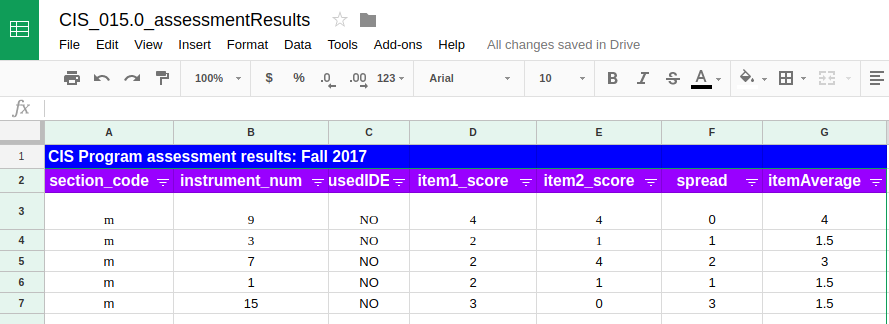

You can view and download the student-level assessment data by accessing this shared Google Drive spreadsheet:

Student work artifacts by score

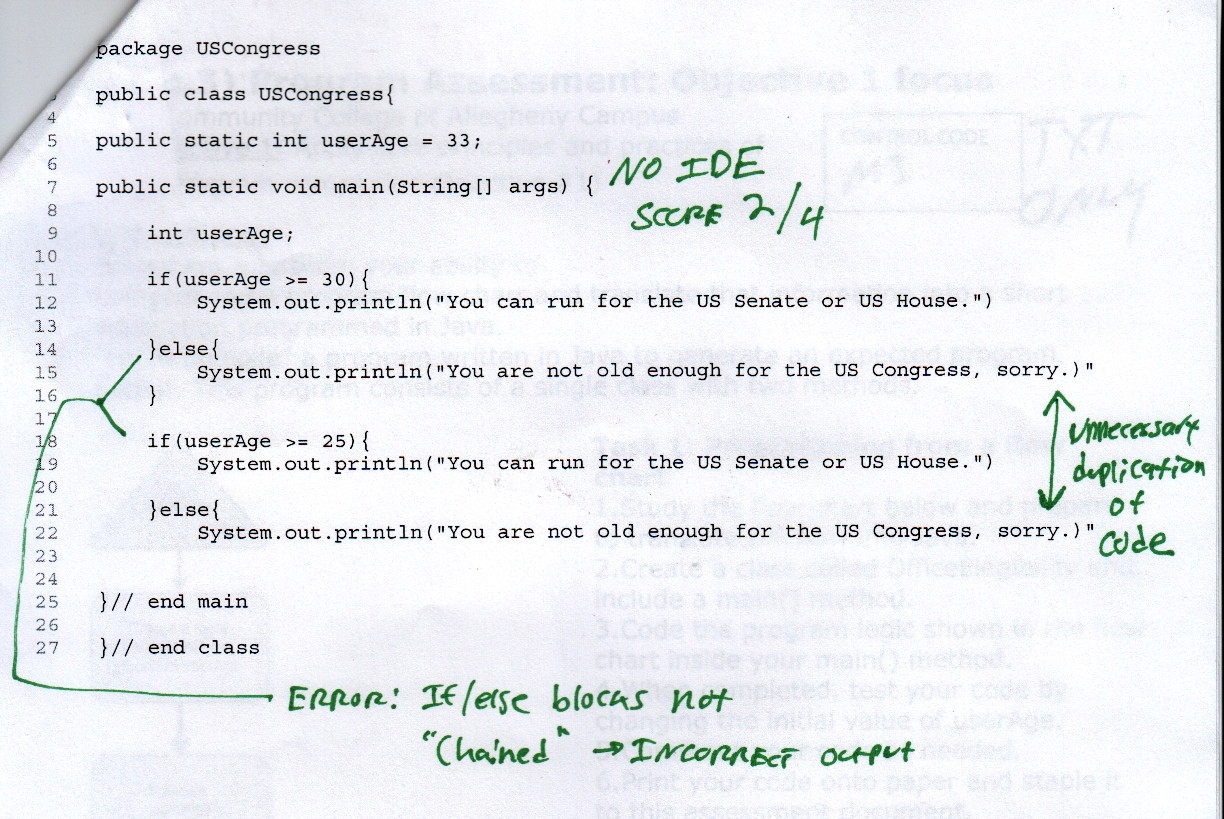

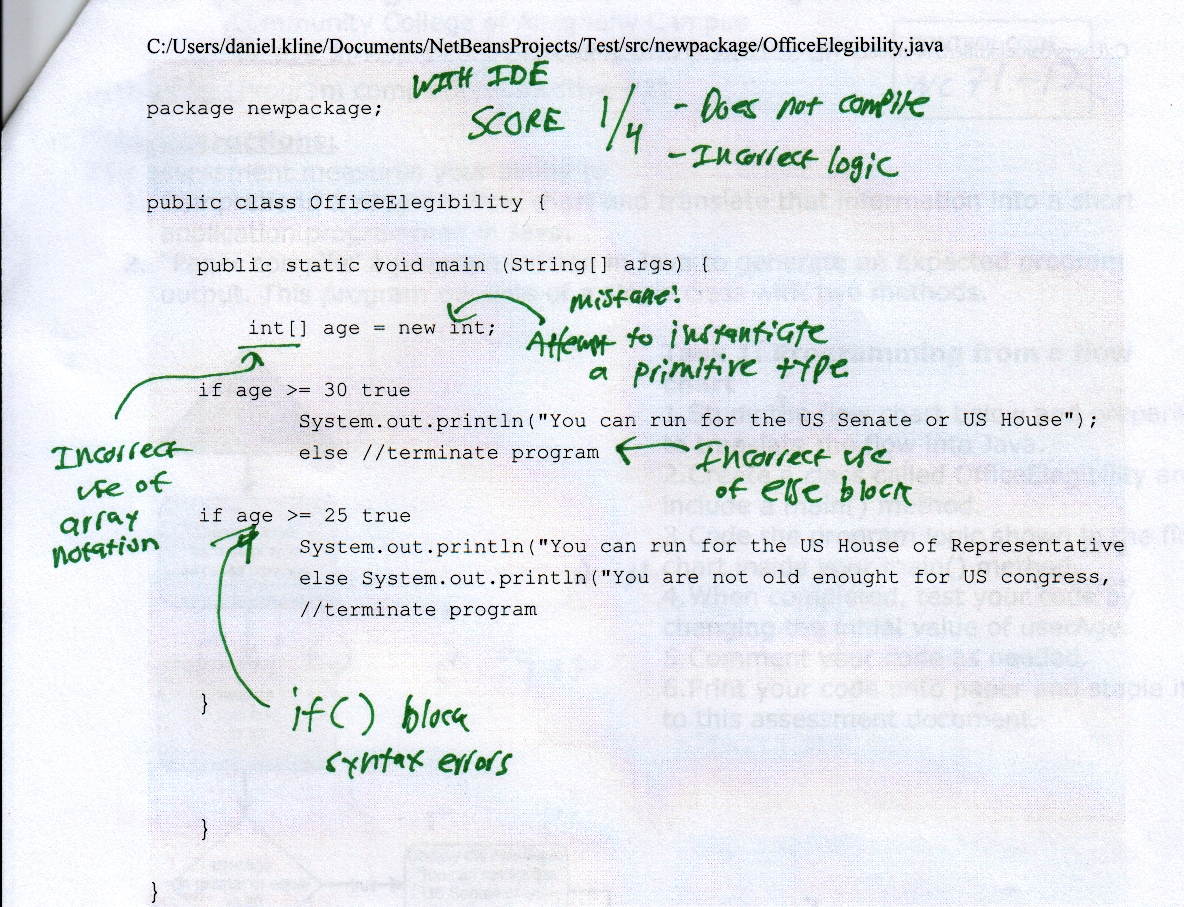

During scoring, representative samples of student responses for each level of proficiency were collected and annotated. Notes are written on each student work sample detailing specific instances of programming errors and successes. Remember that the core criteria for each scoring level are twofold: 1) does the code compile and 2) does the program correctly implement the logic laid out in the specification flow chart.

Commentary on each proficiency level contains thoughts related to how that particular example of student work can inform changes to curriculum and skill practice exercises.

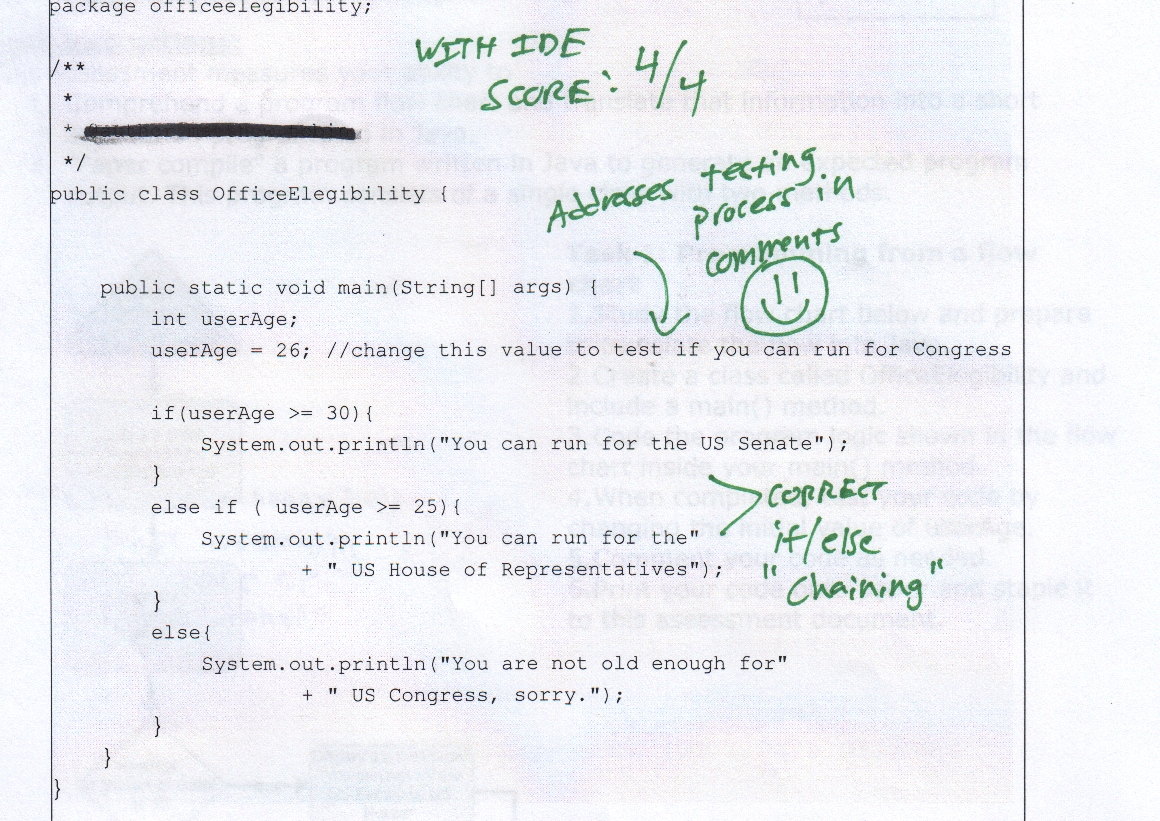

Score 4: Advanced Proficiency

Students scoring a 4 on this task revealed both mastery of basic logic implementation and careful attention to the way the Java code is structured and commented. In other words, their command of Java exceeds the proficiency level required to master the tested objectives.

In the work sample shown in Figure A, this student showed advanced proficiency by clearly noting in a comment how the initialized value of the int type variable age can be changed to test the decision logic implemented with chained if/else blocks of code.

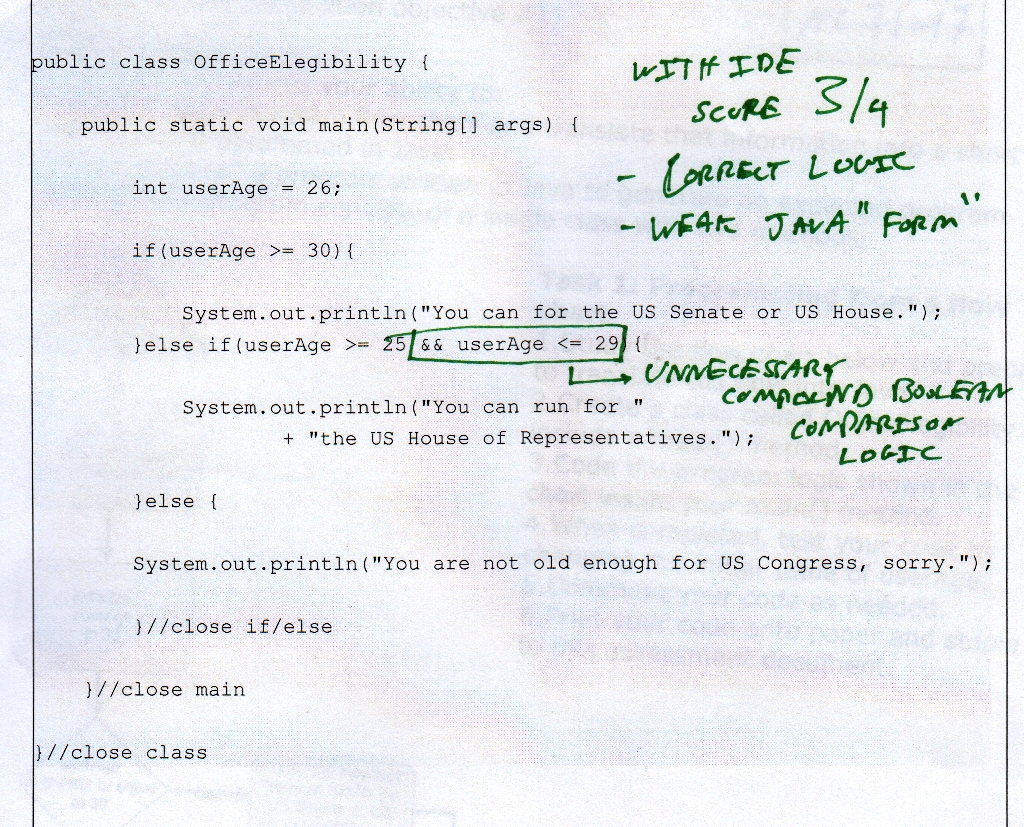

Score 3: Proficient

Students scoring a 3 on task 1 show command of the skills required to master the objectives this assessment tests. This particular student response compiles and produces the specified outputs based on varied inputs. A score of 4 was not awarded because evidence of careful attention to Java code best practices was not present.

For example, the annotations on the work sample show that the student used a compound logic check unnecessarily. Reducing extraneous test expressions is an important feature of well-written Java code. Programs should be coded as simply as possible to reduce the occasion for errors and to make the code easier for readers to comprehend.
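The student's annotated code is not reproduced in this text, so the following is a hypothetical illustration of the general pattern rather than the actual submission. In a chained if/else, once the first test `age >= 30` has failed, the second branch already knows that `age < 30`, so restating it in a compound expression adds nothing. The class name, method names, and return strings are assumptions.

```java
class CompoundCheckExample {

    // Works, but the second test repeats information the chain already provides.
    static String withRedundantCheck(int age) {
        if (age >= 30) {
            return "Senate and House";
        } else if (age >= 25 && age < 30) { // "age < 30" is always true here
            return "House only";
        } else {
            return "Neither";
        }
    }

    // Same behavior with the extraneous test removed.
    static String simplified(int age) {
        if (age >= 30) {
            return "Senate and House";
        } else if (age >= 25) {
            return "House only";
        } else {
            return "Neither";
        }
    }

    public static void main(String[] args) {
        // Confirm that both versions agree across a plausible range of ages.
        for (int age = 0; age <= 120; age++) {
            if (!withRedundantCheck(age).equals(simplified(age))) {
                throw new IllegalStateException("Mismatch at age " + age);
            }
        }
        System.out.println("Both versions produce identical results.");
    }
}
```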

Planned course changes derived from instrument results

The core value of assessment is the insight the results provide into weaknesses in course design and exercise structure. The following sections explore improvements to CIT-111: Introduction to Programming with Java that emerge from the above results.

Course Improvement Idea 1: Focused practice on implementing decision logic specifications into Java

The core value many computer programs deliver lies in their ability to correctly and consistently apply decision logic that controls the flow of execution based on the decision results.

Student results suggest that even the relatively simple logic required for task 1 proficiency challenges some students. Sections of CIT-111 in the Spring of 2018 could address this weakness in student skills with a series of exercises which ask students to implement decision logic laid out in both narrative form and in technical flow charts.

Sample logic implementation exercises

The following figures show a possible progression of increasingly complex logic implementation exercises. The focus of these tasks is to facilitate practice of the essential skill of creating Java applications that can consistently produce outcomes laid out in the program specifications.

As with most of the CIT-111 course exercises, practice items should vary in rigor in such a way that students with more advanced skills can participate in the same exercise as students with lower proficiency levels. This allows students to help one another, which builds even more robust skills in the students doing the helping.

Course Improvement Idea 2: Create mini-lessons on unit testing procedures

Students who scored a 2 or below did not create logic in Java that correctly implemented the decision outcomes specified in the task's flow chart. The weakness in the logic could have been detected easily if the students completing the instrument had followed basic principles of code testing.

To address this weakness revealed in the test results, a few mini-lessons on testing best practices will be designed and administered at strategic points in the course. Possible curricular objectives for these mini-lessons might include the following:

- SWBAT digest the logic of a program and identify a range of test variable values which test each case the logic was designed to process correctly

- SWBAT test a logic block using strategically identified input values and adjust program code based on the results of the tests
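As a sketch of what these objectives could look like in practice, the example below picks test ages on either side of each decision boundary (24/25 and 29/30) plus one value well inside each range. The `classify` method and its return strings are assumptions standing in for a student's logic block; nothing here is taken from a planned lesson.

```java
class EligibilityBoundaryCheck {

    // Stand-in for the logic block under test (hypothetical).
    static String classify(int age) {
        if (age >= 30) {
            return "SENATE_AND_HOUSE";
        } else if (age >= 25) {
            return "HOUSE_ONLY";
        }
        return "NEITHER";
    }

    // Counts how many input/expected pairs the logic gets wrong.
    static int countFailures(int[] ages, String[] expected) {
        int failures = 0;
        for (int i = 0; i < ages.length; i++) {
            String actual = classify(ages[i]);
            if (!actual.equals(expected[i])) {
                System.out.println("FAIL: age " + ages[i] + " gave " + actual
                        + ", expected " + expected[i]);
                failures++;
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        // One value inside each range, plus both sides of each boundary.
        int[] ages = {18, 24, 25, 29, 30, 45};
        String[] expected = {"NEITHER", "NEITHER", "HOUSE_ONLY",
                             "HOUSE_ONLY", "SENATE_AND_HOUSE", "SENATE_AND_HOUSE"};
        System.out.println(countFailures(ages, expected) + " failing case(s)");
    }
}
```

A common slip such as writing `age > 25` instead of `age >= 25` would pass the mid-range values but fail at exactly 25, which is why the boundary values earn their place in the list.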

Reflection on instrument design

The logic required for task 1 was relatively simple. As a result, 72% of students implemented each component correctly, 60% of whom earned a top score of 4, indicating advanced proficiency. This is a promising result but also reveals a potential weakness in assessment design:

If more than half of students are performing at the highest possible level on the assessment, data on the limit of the student proficiency is lost because no finer graded scoring categories exist to rate higher levels of proficiency.

A possible change to the design of this task is to include a sub-logic block within one of the main branches of decision logic that demands higher levels of coding skill. This would allow students of more limited proficiency a chance to implement logic at the desired threshold of proficiency while also challenging the students with more programming experience.
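One hypothetical form such a sub-logic block could take is a citizenship-duration check nested inside the age branches. The Constitution requires seven years of citizenship for the House and nine for the Senate. The class name, method name, parameters, and return strings below are assumptions and are not part of the administered instrument.

```java
class OfficeEligibilityExtended {

    // Hypothetical extension: age decides the outer branch,
    // years of citizenship decide the nested one.
    static String eligibleOffices(int age, int yearsCitizen) {
        if (age >= 30) {
            // Age qualifies for both chambers; citizenship narrows the result.
            if (yearsCitizen >= 9) {
                return "Senate and House";
            } else if (yearsCitizen >= 7) {
                return "House only";
            } else {
                return "Neither";
            }
        } else if (age >= 25) {
            // Age qualifies for the House only.
            if (yearsCitizen >= 7) {
                return "House only";
            } else {
                return "Neither";
            }
        } else {
            return "Neither";
        }
    }

    public static void main(String[] args) {
        // Old enough for the Senate, but one year short on citizenship.
        System.out.println(eligibleOffices(35, 8));
    }
}
```

Students who implement only the outer age branches would still reach the proficiency threshold, while the nested checks give more experienced students something further to work on.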

A natural downside to increasing the rigor of the instrument is that more students will complete the instrument with a sense that they were unable to finish the task correctly, which can be demoralizing. This can be addressed with an explanation of how the rigor of the task is graduated to address varying skill levels.

Task 2 Results: Paper compiling with method calls

Task instrument

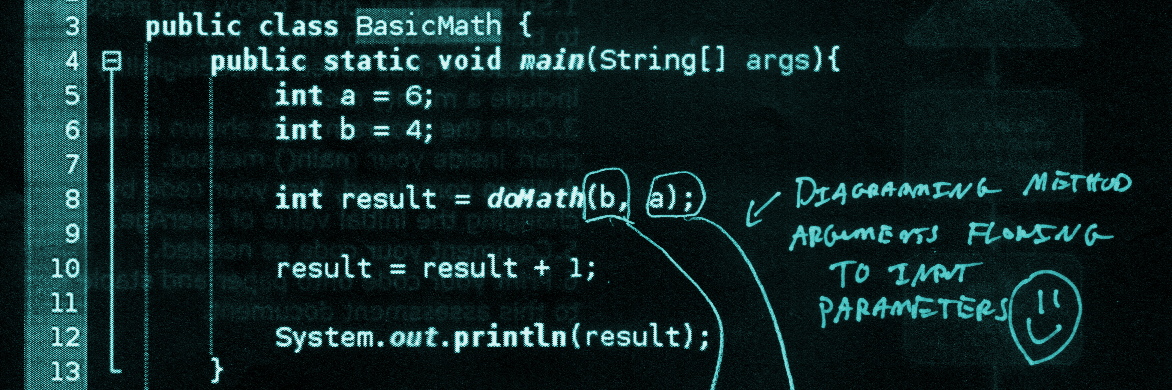

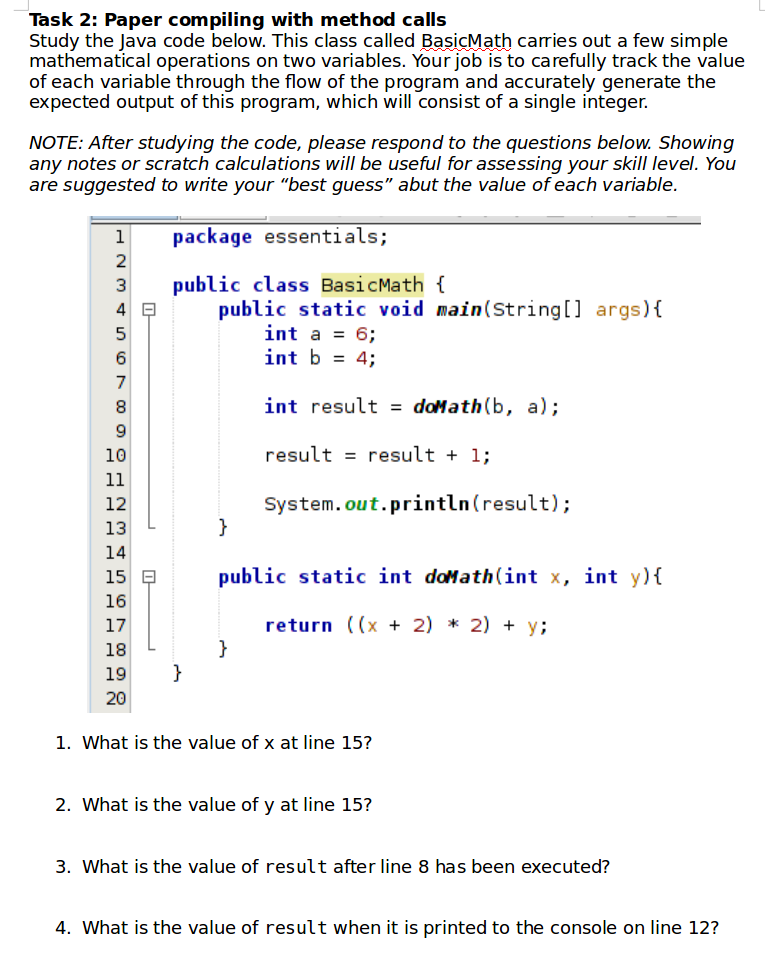

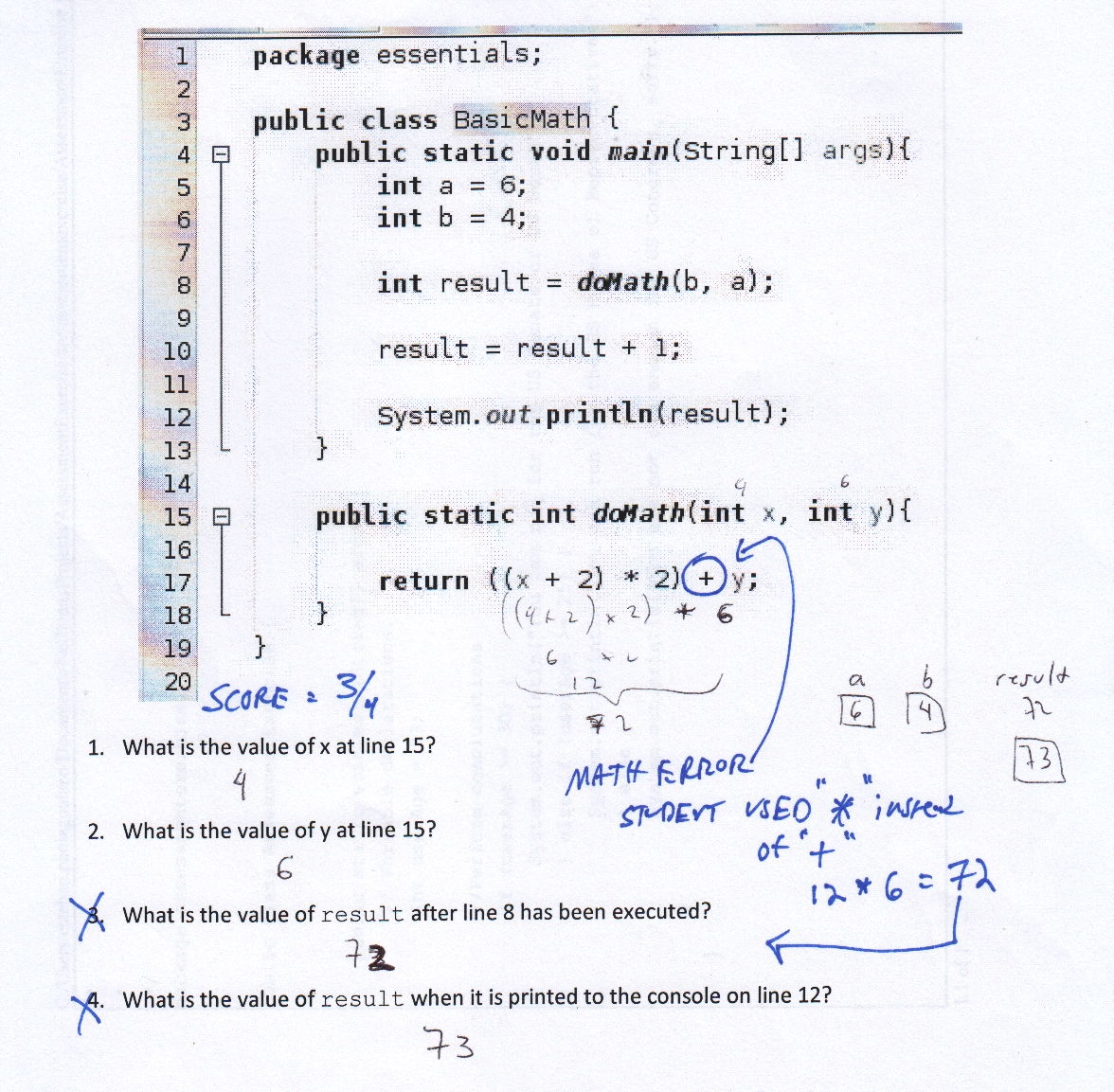

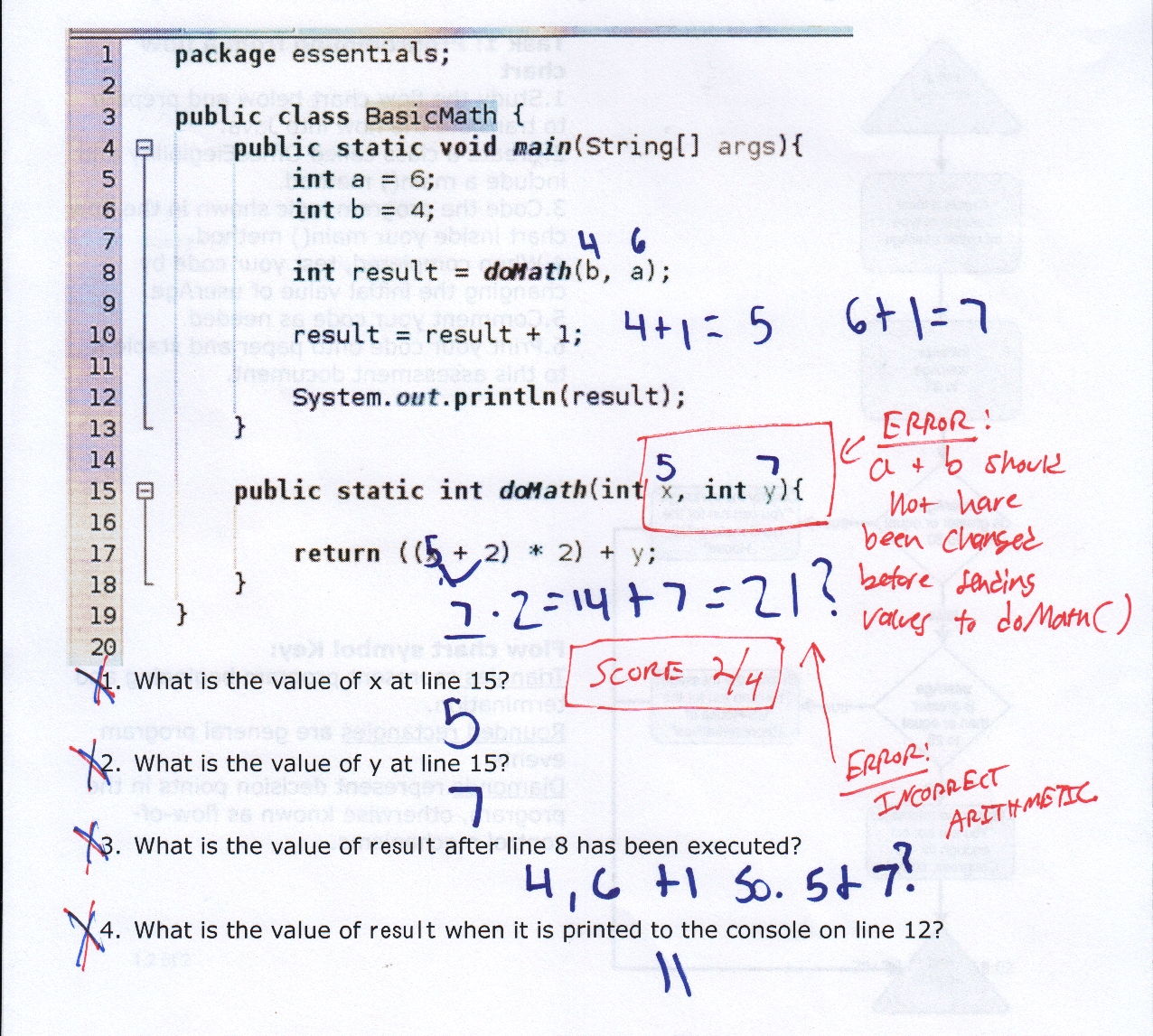

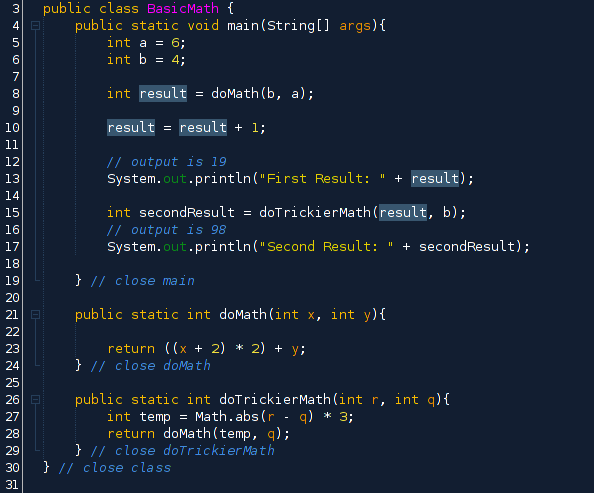

The following document clipping contains the student prompt for task 2. Students are provided a compilable Java class called BasicMath. Their task is to compute the value of program variables as they are initialized, passed to a method outside main() called doMath(), and returned to the calling method.

Knowledge and skill requirement breakdown

The following table contains curriculum-level objectives that back the skills which task 2 requires students to demonstrate. The objectives are mapped to particular features of the task.

| Learning Unit | Relevant Objective | Manifestation in task 2 |
|---|---|---|
| Primitive type values | SWBAT determine the value of primitive type variables when they are initialized and as they are operated upon by various statements in code. | BasicMath creates two int type primitive variables a and b which are then passed to doMath and combined into a single int type response. Students must trace the value of these variables as they are passed into the method. |
| Methods | SWBAT track the value of variables as they are transferred from within a calling method to a target method's input parameters. | Variables a and b are passed as arguments to doMath which stores the passed values in variables x and y. Student skills are rigorously tested by inverting the traditional order of passing the variable initialized first as the first argument to a method. |
| Methods | SWBAT call a method which requires values for input parameters and handle the returned value of a specified type. | The returned int value from doMath is stored in the int variable result. Students must trace the value returned by the method as it is assigned to a third local variable. |
| Computations | SWBAT evaluate mathematical expressions which contain simple binary operators acting on variable values and evaluation precedence symbols. | doMath includes a single line of code which evaluates a mathematical expression including precedence symbols and returns the result. Students must correctly inject values into the expression's variables and correctly compute the result. |
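The BasicMath listing given to students is not reproduced in this text. The sketch below reconstructs its general shape from the table above and from the return expression quoted later in this document. The initial values of a and b are assumptions chosen for illustration and may differ from those on the instrument.

```java
class BasicMath {

    public static void main(String[] args) {
        int a = 1; // assumed value
        int b = 4; // assumed value

        // Arguments are passed in "inverted" order: b arrives as x, a arrives as y.
        int result = doMath(b, a);
        System.out.println(result);
    }

    // Combines the two inputs into a single int using precedence symbols.
    static int doMath(int x, int y) {
        return ((x + 2) * 2) + y;
    }
}
```

With these assumed values, x is 4 and y is 1, so the program prints 13. Passing the arguments as doMath(a, b) would instead give 10, which is the kind of slip the inverted order is meant to catch.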

Scoring rubric

The following rubric was developed to score task 2. Note that each scoring level is associated with a subjective label related to student proficiency. A score of 3 or 4 represents performance that demonstrates mastery of each of the curriculum-level objectives listed in the previous section.

| Proficiency Level | Scoring Criteria |

|---|---|

| 0 – Not attempted |

|

| 1 – Insufficient skills |

|

| 2 – Below proficient |

|

| 3 – Proficient |

|

| 4 – Advanced |

|

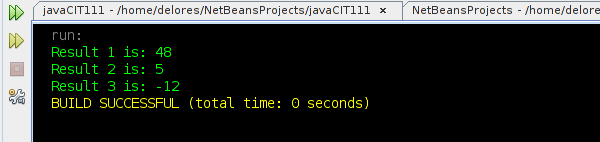

Aggregated assessment instrument results

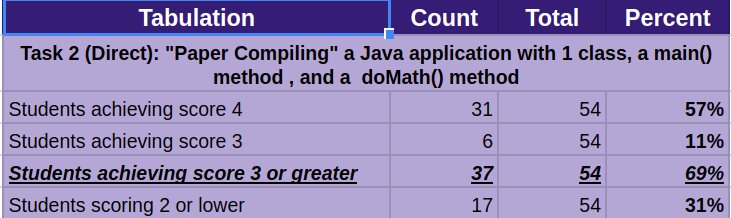

69% of students who completed task 2 scored a 3 or a 4, meaning proficient or advanced. This is 1 percentage point below the 70% criterion originally set for meeting competence.

It is notable, however, that of the 69% who scored 3 or 4, 57% scored a 4, suggesting that more than half of the proficient students are performing with both competence and confidence. Since more students scored 2 or lower than scored an even 3, we have a nearly bimodal distribution that suggests that the cohort of students is "split" in ability: a substantial portion performing at a high level, and a chunk performing below proficient.

The results are summarized in the following table:

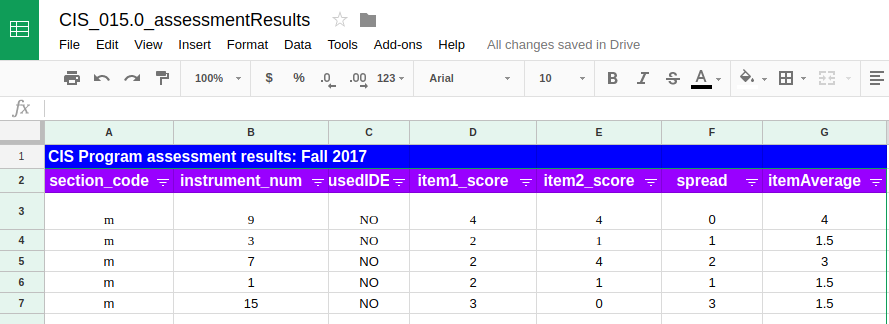

You can view and download the student-level assessment data by accessing this shared Google Drive spreadsheet:

Student work artifacts by score

Planned course changes derived from instrument results

69% of students performed at the level of "proficient" or "advanced" on task 2, just short of the 70% threshold. Their general performance suggests two core improvements to the CIT-111 content for Spring 2018:

- An injection of exercises to build confidence computing the values of expressions operating on variable values and precedence symbols

- Consistently offered coaching on "marking up" paper compiling challenges, so that increasingly complicated logic can be simulated with a lower chance of error than if computations were undertaken entirely in one's head or with disorganized jottings

Course Improvement Idea 1: Injection of math essentials exercises

Ten of the students who completed this exercise showed evidence of a deficiency in executing basic math that operates on variable values. The guts of the doMath() method, for example, contained the expression:

((x + 2) * 2) + y

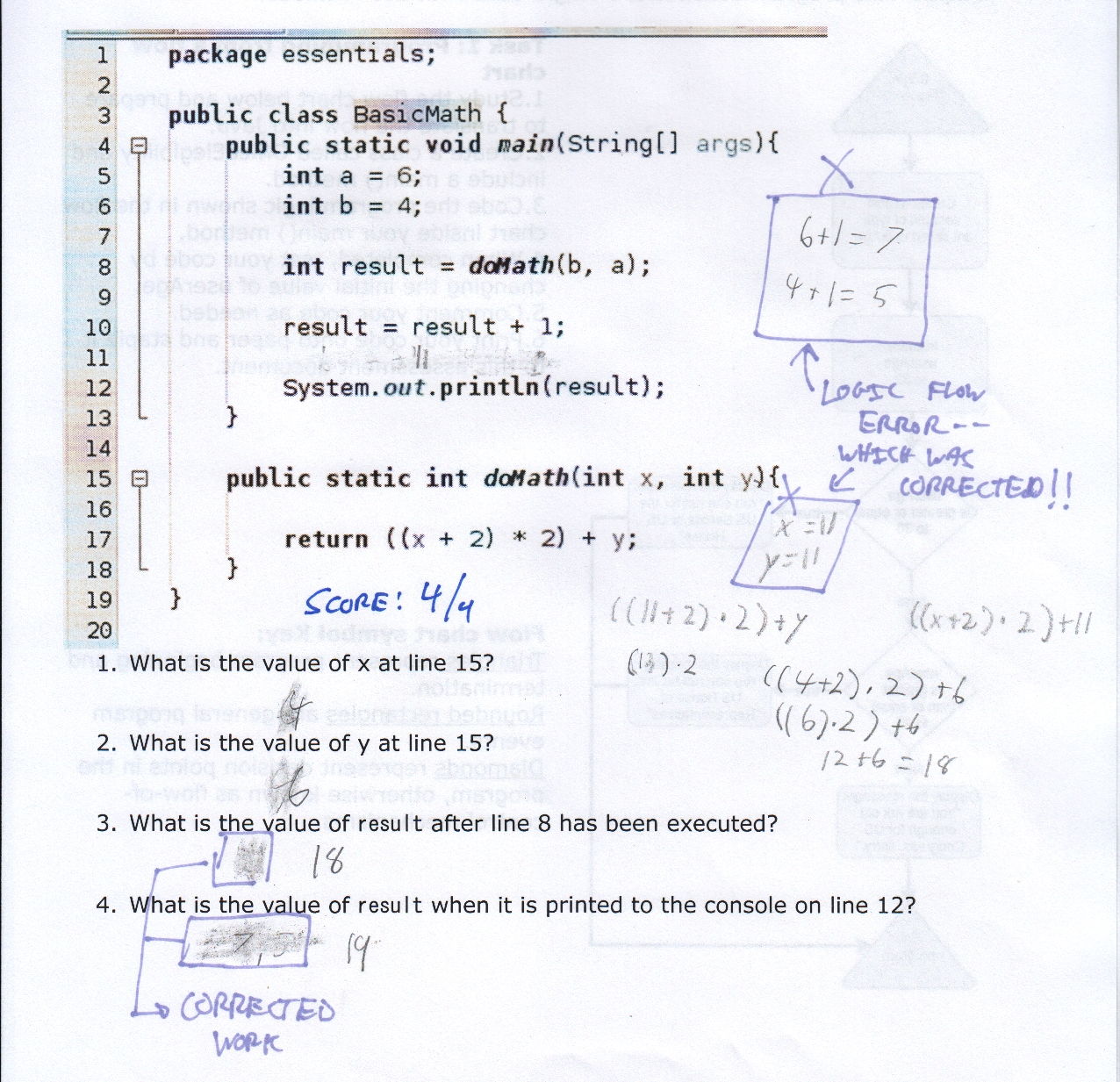

Figure J, for example, contains student work scored at a 2 level. This student attempted to evaluate the above expression but made a basic math error.

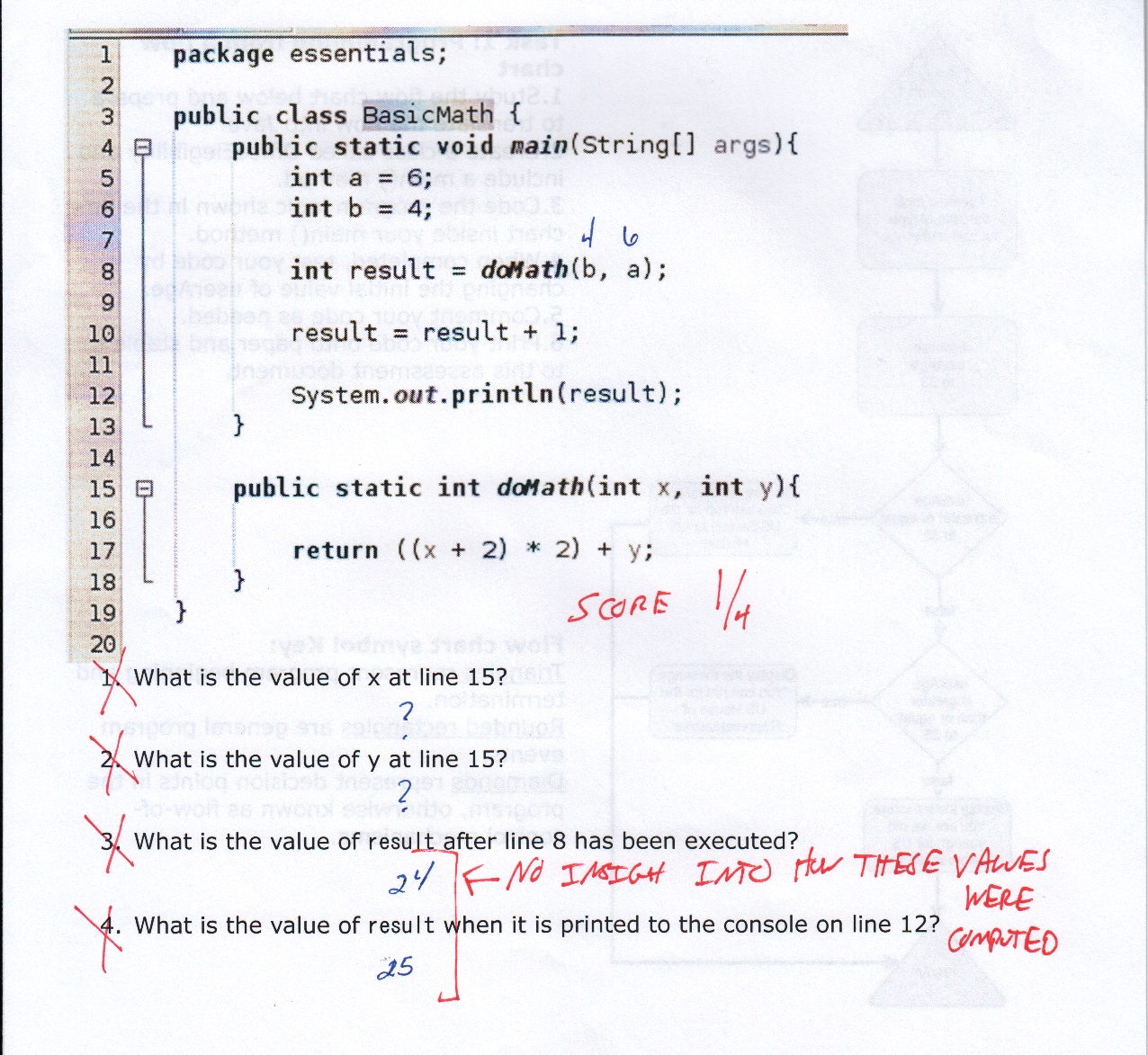

Several other students who also scored a 2 made notes on the assessment that showed errors related to this final computation. The student scoring a 1 in figure K also did not correctly compute the int return value passed back by doMath(). This student left fewer clues about their point of struggle, but they guessed a value that roughly corresponds to

6 * 4 = 24 and 24 + 1 = 25

This clue suggests the student struggled with the way the return expression should be evaluated.

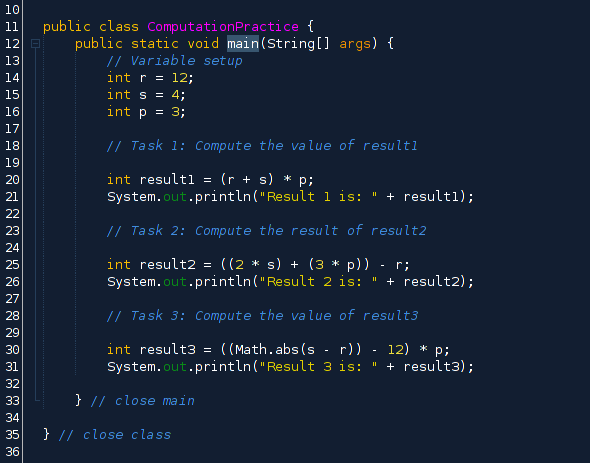

Sample student exercises

The following code snippet provides some "seed content" for a series of exercises that give students practice computing values using basic math operations. Note that the items in this little math quiz progress from less to more rigor. This allows students who are already confident in basic math computations to still be challenged by computing the outcome of more complex operations.

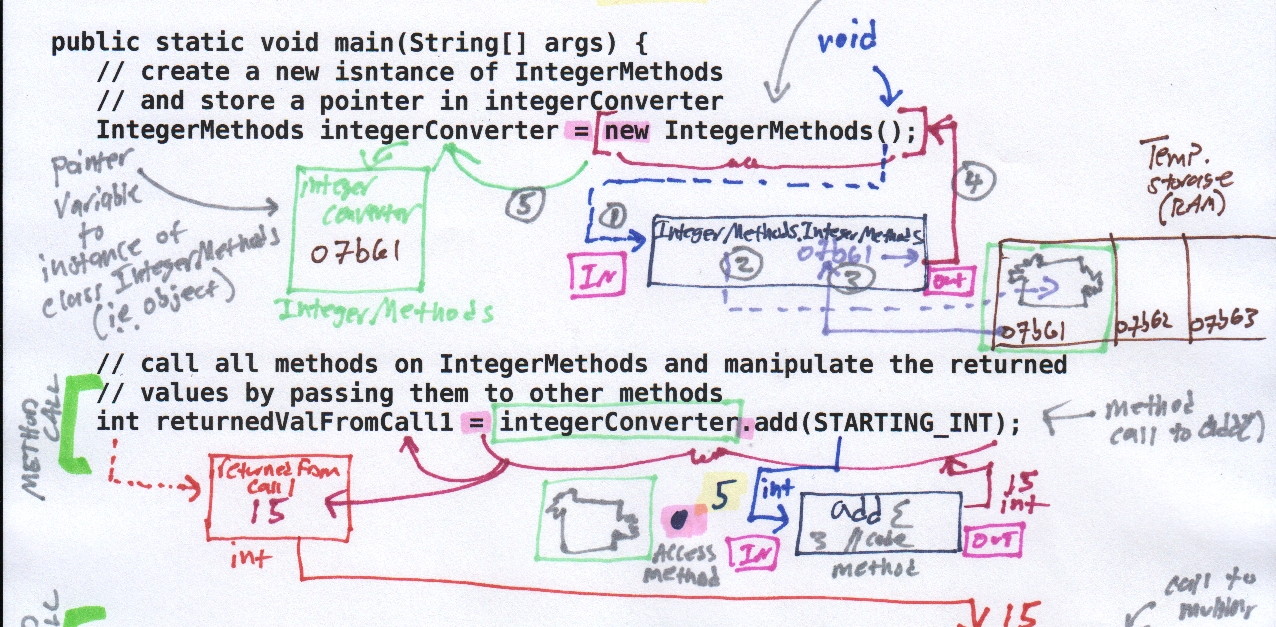
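In the same spirit, a hypothetical set of quiz items ordered from less to more rigor might look like the following. The class name, the `answers` method, and the values of x and y are all assumptions; only item 4 borrows the return expression from doMath().

```java
class MathWarmUp {

    // Returns the answer to each quiz item for the given x and y.
    static int[] answers(int x, int y) {
        return new int[] {
            x + y,             // item 1: a single operator
            x + y * 2,         // item 2: multiplication is evaluated before addition
            (x + y) * 2,       // item 3: parentheses change the order
            ((x + 2) * 2) + y, // item 4: nested parentheses, as in doMath()
            y / x + y % x      // item 5: int division and remainder
        };
    }

    public static void main(String[] args) {
        int x = 3; // assumed value
        int y = 5; // assumed value

        // Students compute each item on paper first, then run this to check.
        int[] results = answers(x, y);
        for (int i = 0; i < results.length; i++) {
            System.out.println("Item " + (i + 1) + ": " + results[i]);
        }
    }
}
```

For x = 3 and y = 5 the five answers are 8, 13, 16, 15, and 3. Item 5 is the one most likely to surprise students, since 5 / 3 is 1 under int division.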

Course Improvement Idea 2: Paper computation notes coaching

The flow of variables a and b down to doMath() and back up to main() is a relatively straightforward life cycle for two variables. The lack of markings and careful tracking on the vast majority of test instruments suggests that students have not developed a robust habit of using "scratch" markings and diagrams on code they are compiling by hand.

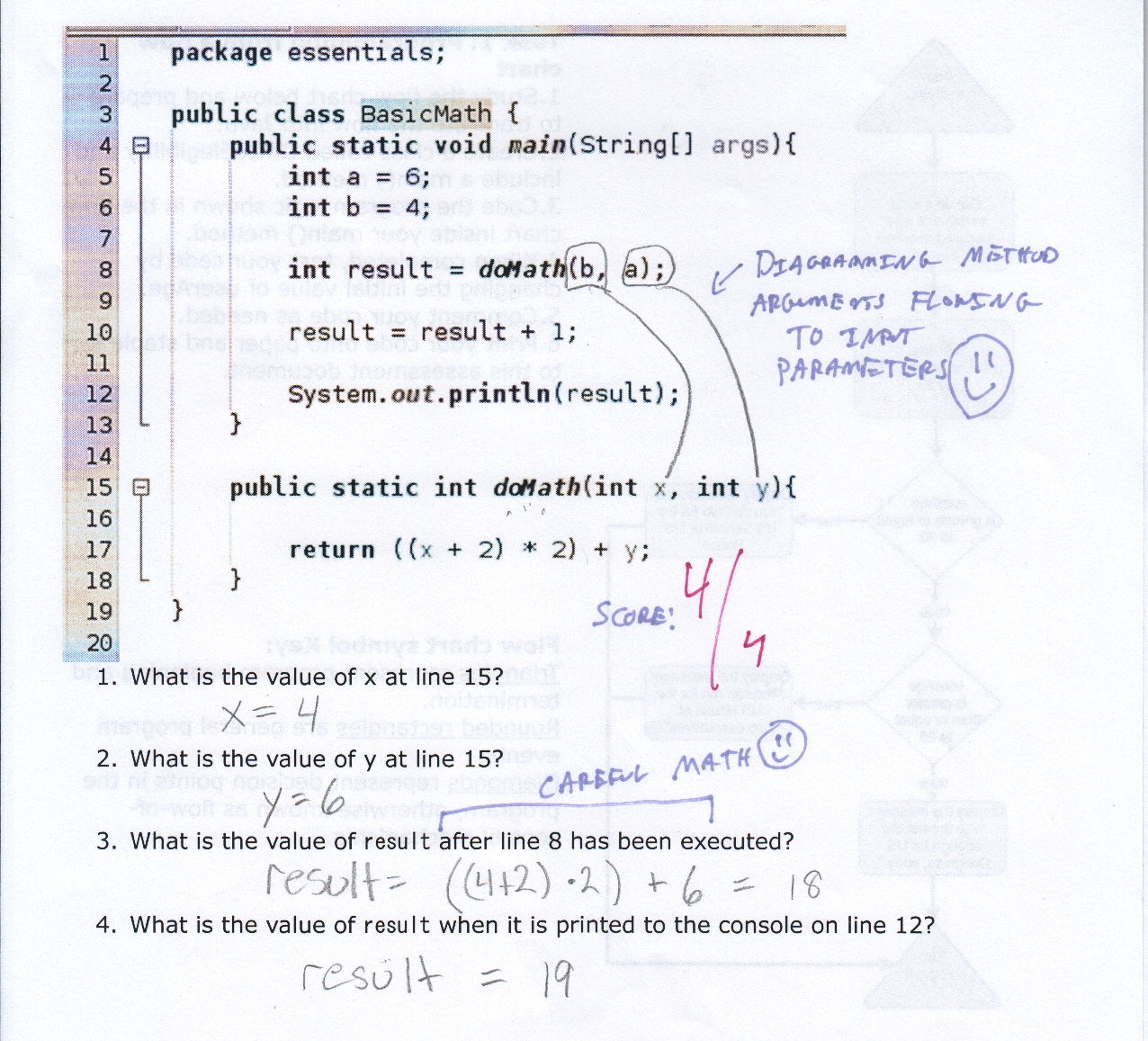

The only consistent markings made on instruments scoring a 3 or 4 were notes about the values of x and y after the method doMath() had been called on line 8. The student who scored a 2 shown in figure J, however, revealed strong habits in using note space to work through computations. Sadly, this student was unable to apply the math principles to compute the correct value of result following a return from doMath().

Paper compiling mini-lessons

A change in curriculum to address this shortcoming in student habits involves what one might term "mini-lessons" on best practices for marking up code to determine how the compiler will process it. Rather than devoting an entire lesson to this practice, students should be coached on how to mark up code through quick examples and test tasks given at various points throughout the course.

The following figure contains an example of a model approach to marking up a simple method and tracing how it manipulates input parameters and returns a single typed value:
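A comparable markup can be sketched directly in code comments. The class, the `combine` method, and the argument values below are hypothetical; each comment records what a student might jot beside that line while tracing the program by hand.

```java
class TraceExample {

    public static void main(String[] args) {
        int a = 3;                     // a = 3
        int b = 7;                     // b = 7
        int result = combine(b, a);    // call: x <- b (7), y <- a (3)
        System.out.println(result);    // result = 17
    }

    static int combine(int x, int y) { // on entry: x = 7, y = 3
        int doubled = x * 2;           // doubled = 7 * 2 = 14
        int total = doubled + y;       // total = 14 + 3 = 17
        return total;                  // 17 goes back to main
    }
}
```

Writing the arrow notes at the call site is the key habit: it forces the student to record which argument lands in which parameter before doing any arithmetic.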

Reflection on instrument design

Similar to the comments made about improving task 1, this task could be improved by asking students to compute the output of a method in addition to doMath(), called something like doTrickierMath(), which takes in parameters that are computed by doMath() or perhaps even calls doMath() itself.

The value of this addition is to increase the overall rigor of the instrument without making the initial questions more difficult. This is known as creating an assessment that "reveals understanding" of students at a wider range of proficiency levels.

The following code snippet shows the possible additional method in task 2:
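A minimal sketch of what such a method might look like is given here under stated assumptions. The body of doTrickierMath(), the class name, and the values of a and b are invented for illustration; only the return expression in doMath() comes from the instrument.

```java
class BasicMathExtended {

    public static void main(String[] args) {
        int a = 1; // assumed value
        int b = 4; // assumed value

        int result = doMath(b, a);                // ((4 + 2) * 2) + 1 = 13
        int trickier = doTrickierMath(result, a); // takes a value computed by doMath()
        System.out.println(trickier);
    }

    static int doMath(int x, int y) {
        return ((x + 2) * 2) + y;
    }

    // Hypothetical third method: calls doMath() with its arguments swapped,
    // then subtracts a remainder so students must also handle the % operator.
    static int doTrickierMath(int m, int n) {
        return doMath(n, m) - (m % 5);
    }
}
```

Tracing by hand with these values: doMath(1, 13) is ((1 + 2) * 2) + 13 = 19, 13 % 5 is 3, and the program prints 16. Students who stop after the first call can still answer the original questions correctly.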

This page was created on 11 Dec 17 by Eric Darsow. All content on this page may be reproduced, edited, and shared WITHOUT permission from the author or technologyrediscovery.net.